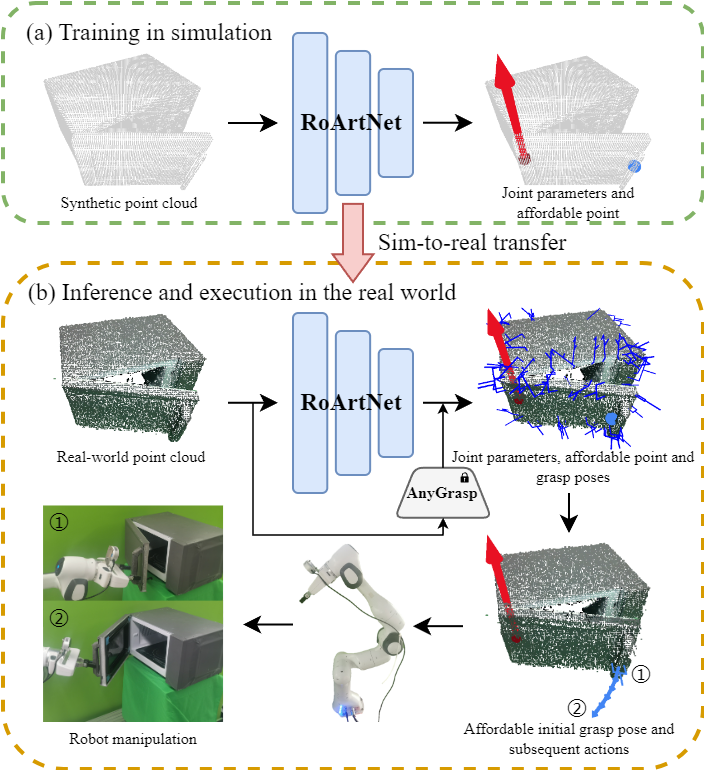

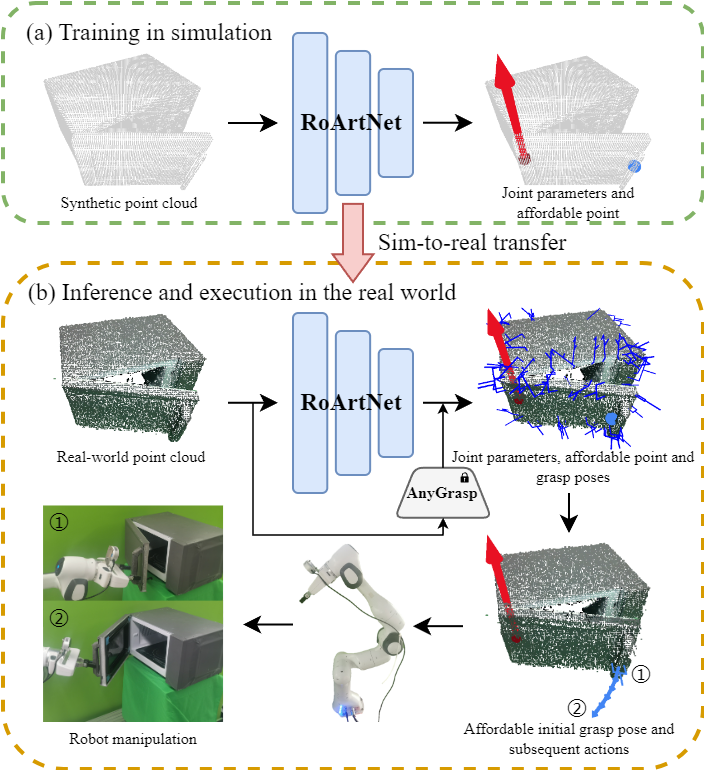

Framework

- Robust articulation network
  - Input: single-view point cloud
  - Output: joint parameters and affordable point

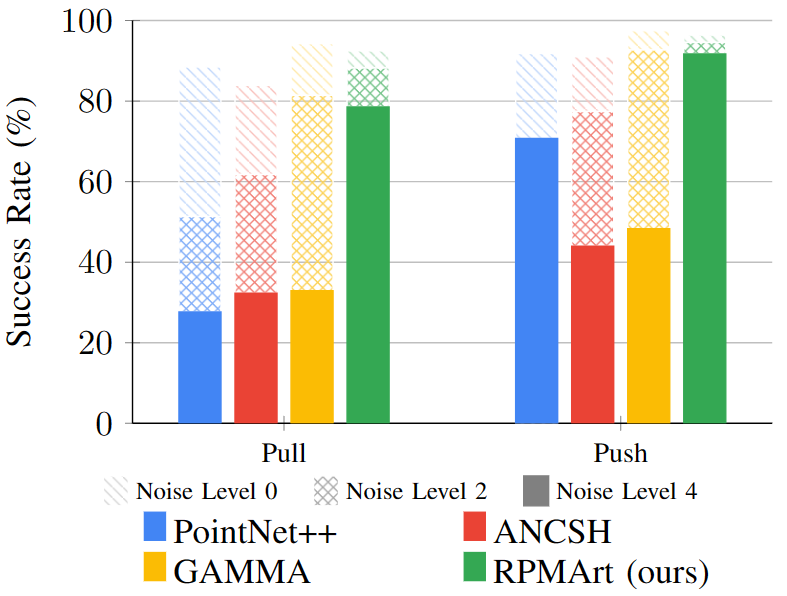

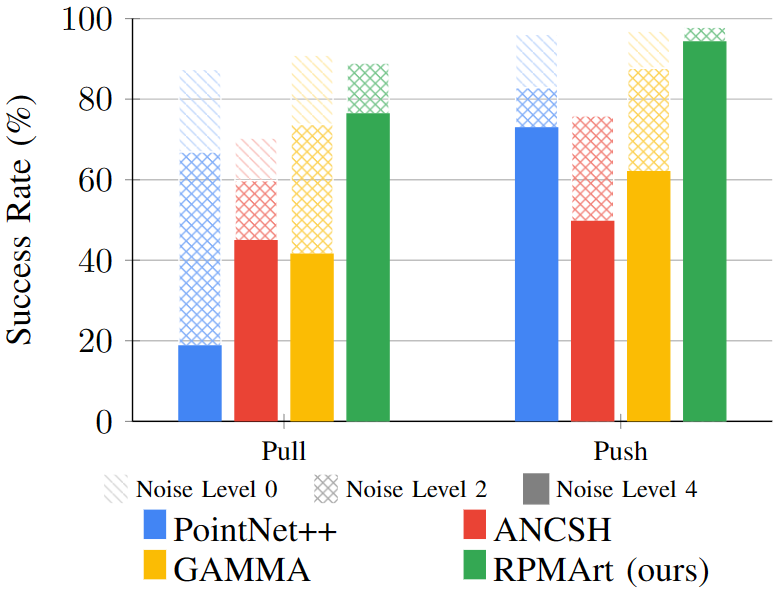

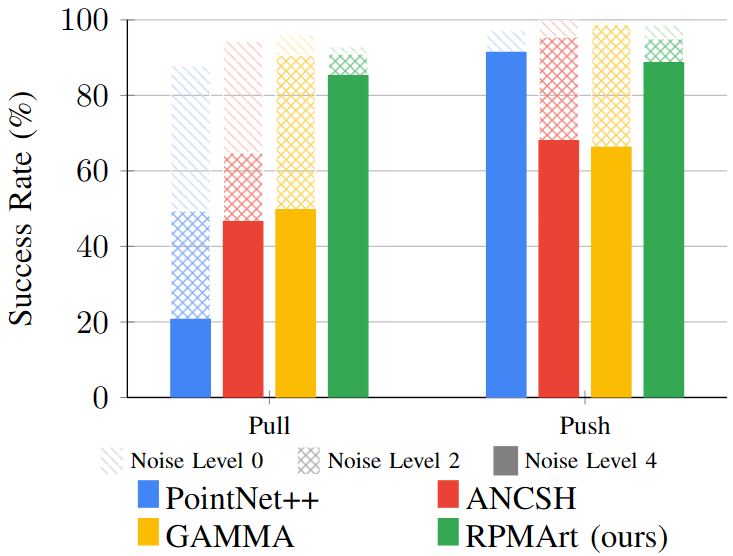

- Affordance-based physics-guided manipulation
  - Affordable grasp pose selection
  - Articulation joint constraint

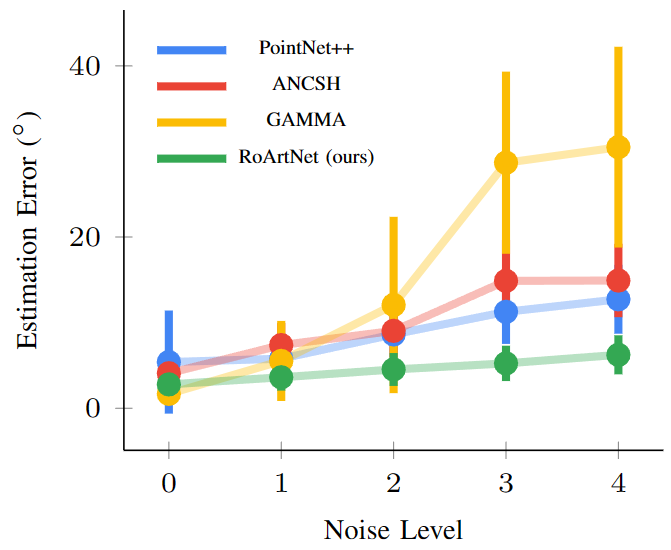

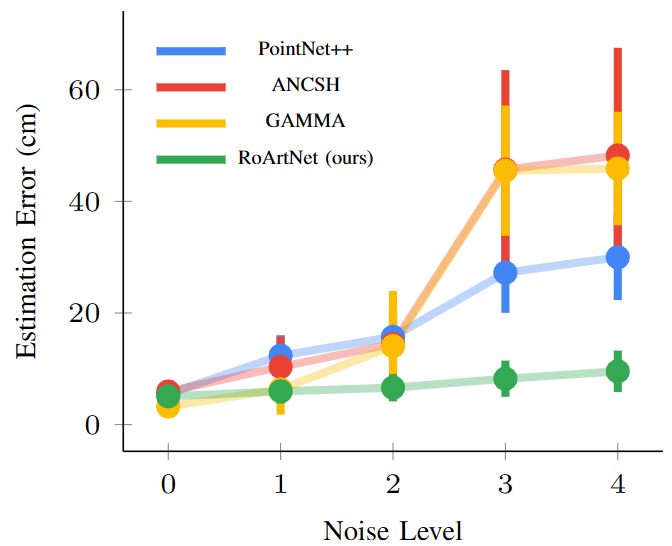

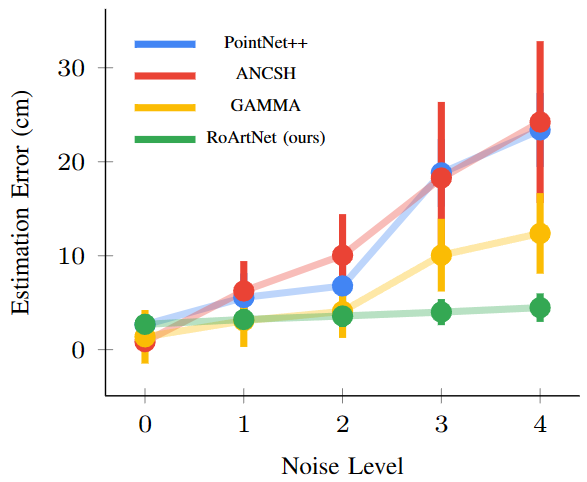

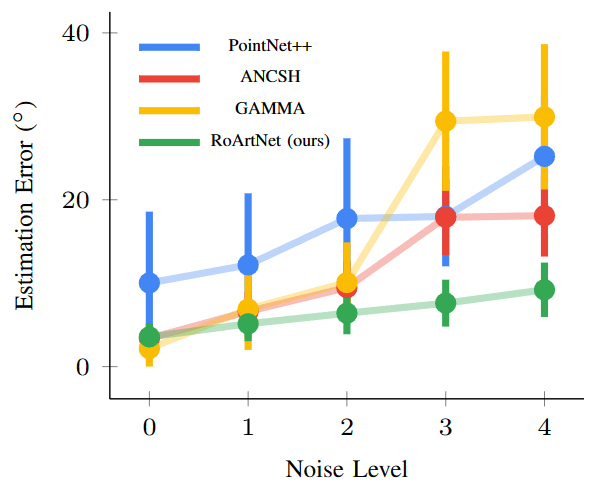

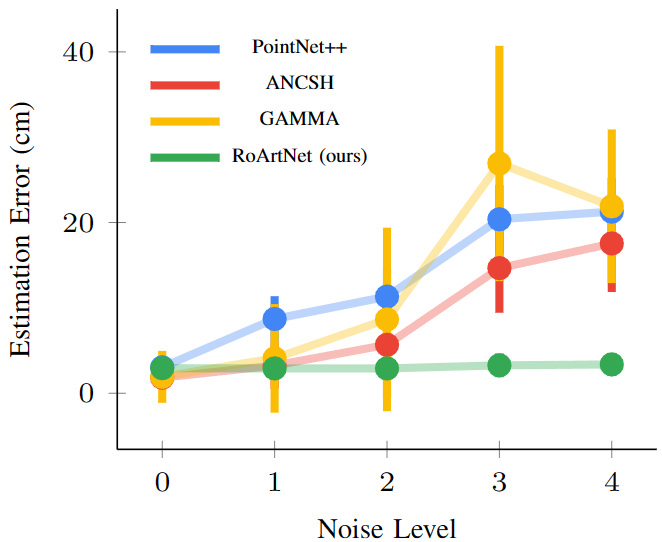

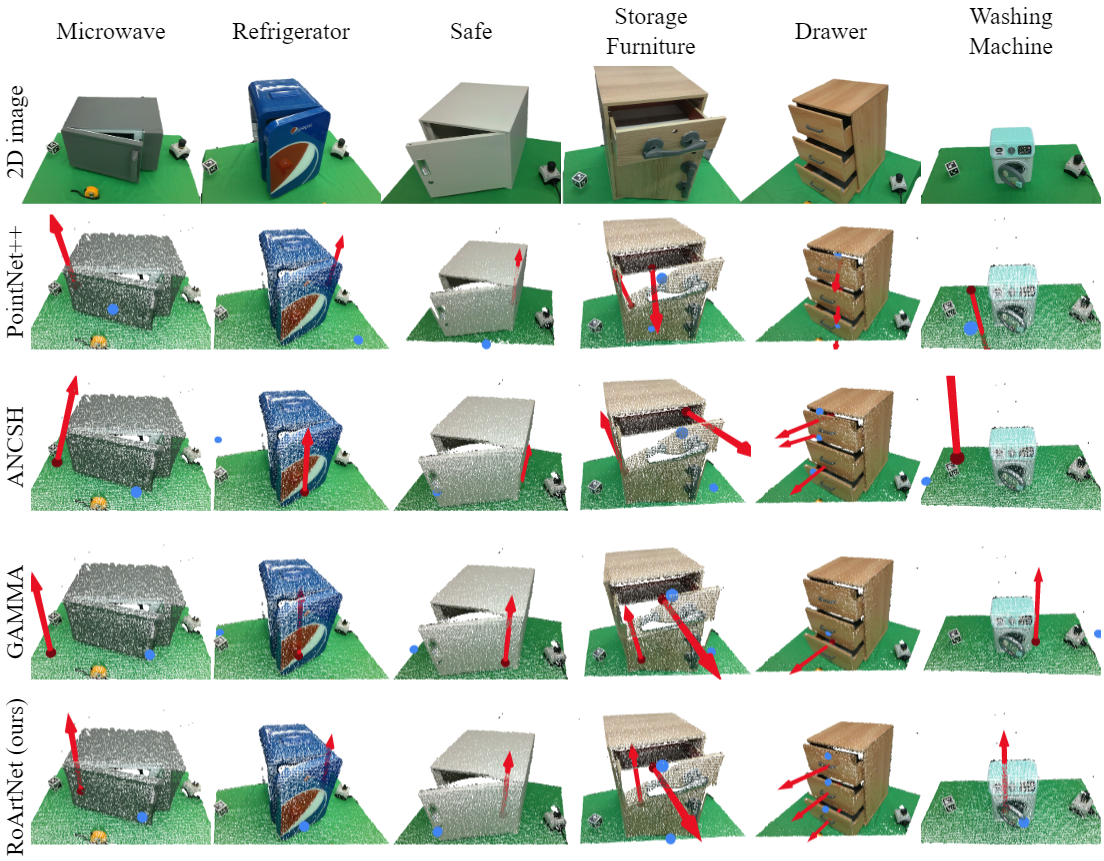

During training, voting targets derived from the simulator's part segmentation, joint parameters, and affordable points supervise RoArtNet. Given a noisy real-world point cloud observation, RoArtNet can still produce robust estimates of the joint parameters and affordable points through point tuple voting. Affordable initial grasp poses are then selected from the AnyGrasp-generated grasp poses based on the estimated affordable points, and subsequent actions are constrained by the estimated joint parameters.
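The two manipulation steps can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `select_grasp` and `rotate_about_joint` are hypothetical, grasp candidates are assumed to be simple `(position, score)` pairs rather than real AnyGrasp outputs, the selection rule is assumed to be nearest distance to the estimated affordable point, and the joint is assumed to be revolute, so the constrained motion is a rotation about the estimated joint axis.

```python
import math


def select_grasp(grasps, affordable_point):
    """Pick the candidate grasp closest to the estimated affordable point.

    grasps: list of (position, score) pairs, position being an (x, y, z) tuple.
    This stands in for a detector's output; it is an assumed, simplified format.
    """
    return min(grasps, key=lambda g: math.dist(g[0], affordable_point))


def rotate_about_joint(point, origin, direction, angle):
    """Rotate a point about a revolute joint axis (Rodrigues' rotation formula).

    origin and direction are the estimated joint parameters; angle is in radians.
    """
    norm = math.sqrt(sum(d * d for d in direction))
    k = [d / norm for d in direction]              # unit joint direction
    v = [p - o for p, o in zip(point, origin)]     # point relative to joint origin
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    cross = [
        k[1] * v[2] - k[2] * v[1],
        k[2] * v[0] - k[0] * v[2],
        k[0] * v[1] - k[1] * v[0],
    ]
    dot = sum(ki * vi for ki, vi in zip(k, v))
    rotated = [
        v[i] * cos_a + cross[i] * sin_a + k[i] * dot * (1.0 - cos_a)
        for i in range(3)
    ]
    return tuple(r + o for r, o in zip(rotated, origin))


# Illustrative values only: two candidate grasps and a vertical hinge at the origin.
candidates = [((0.0, 0.0, 0.0), 0.9), ((1.0, 1.0, 1.0), 0.5)]
chosen = select_grasp(candidates, affordable_point=(0.9, 1.0, 1.0))
next_position = rotate_about_joint(
    chosen[0], origin=(0.0, 0.0, 0.0), direction=(0.0, 0.0, 1.0), angle=math.pi / 12
)
```

Under these assumptions, stepping `angle` in small increments yields waypoints on the arc that the grasped part must follow, which is one way the estimated joint parameters could constrain subsequent actions. A prismatic joint would instead translate the grasp position along `direction`.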

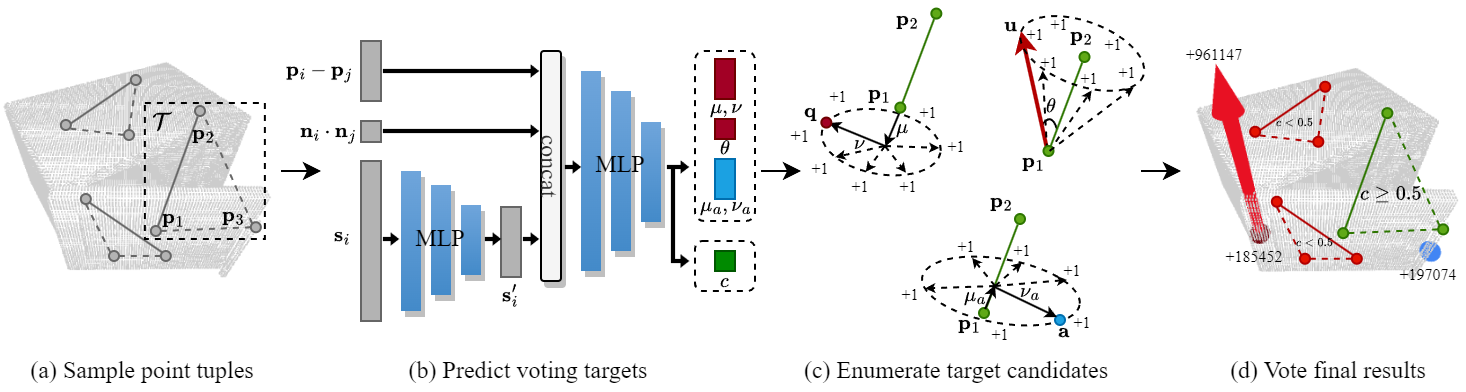

Our primary contribution is the robust articulation network (RoArtNet), designed for robustness and sim-to-real transfer through local feature learning, point tuple voting, and an articulation awareness scheme. First, a collection of point tuples is uniformly sampled from the point cloud. For each point tuple, a neural network predicts several voting targets from the tuple's local context features. In addition, an articulation score supervises the network so that it is aware of the articulation structure. Multiple candidates are then generated from the predicted voting targets, subject to the one degree-of-freedom ambiguity constraint. Finally, using only point tuples with a high articulation score, the candidate joint origin, joint direction, and affordable point with the most votes are selected as the final estimate.
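The final selection step, score-gated vote accumulation, can be sketched as follows. This is a simplified illustration rather than RoArtNet's actual voting code: it assumes each point tuple has already been turned into one 3D candidate (the candidate generation under the one degree-of-freedom ambiguity is omitted), and the function name `vote`, the threshold, and the voxel bin size are all hypothetical choices.

```python
from collections import defaultdict


def vote(candidates, scores, score_threshold=0.5, bin_size=0.05):
    """Return the mean of the candidates in the most-voted voxel bin.

    candidates: list of (x, y, z) tuples, one per point tuple (assumed format).
    scores: predicted articulation score per point tuple, in [0, 1].
    Tuples scoring below score_threshold do not vote at all.
    """
    bins = defaultdict(list)
    for candidate, score in zip(candidates, scores):
        if score < score_threshold:
            continue  # low articulation score: ignore this tuple's vote
        key = tuple(int(x // bin_size) for x in candidate)  # voxel index
        bins[key].append(candidate)
    if not bins:
        return None  # no tuple passed the articulation gate
    winners = max(bins.values(), key=len)  # bin with the most votes
    n = len(winners)
    return tuple(sum(p[i] for p in winners) / n for i in range(3))


# Illustrative values only: two agreeing votes, one outlier, one gated-out vote.
estimate = vote(
    candidates=[(0.51, 0.51, 0.51), (0.52, 0.52, 0.52),
                (0.90, 0.90, 0.90), (0.53, 0.51, 0.52)],
    scores=[0.9, 0.8, 0.95, 0.1],
)
```

Because the estimate comes from the densest bin rather than an average over all predictions, isolated outliers from noisy regions of the point cloud do not shift the result, which is the intuition behind voting as a source of robustness. The same accumulation could be applied to a discretized direction space for the joint direction.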